Autonomous cars can only understand the real world through a map

In the second post of our series "3 steps to automation", we look at the need for a precise and trustworthy map that can be ‘read’ by the car.

As we saw in the first post in the series, sensors alone, however numerous and varied, do not make a car automated. This is why HERE is developing an automated driving cloud that works in conjunction with sensors to create truly self-driving cars.

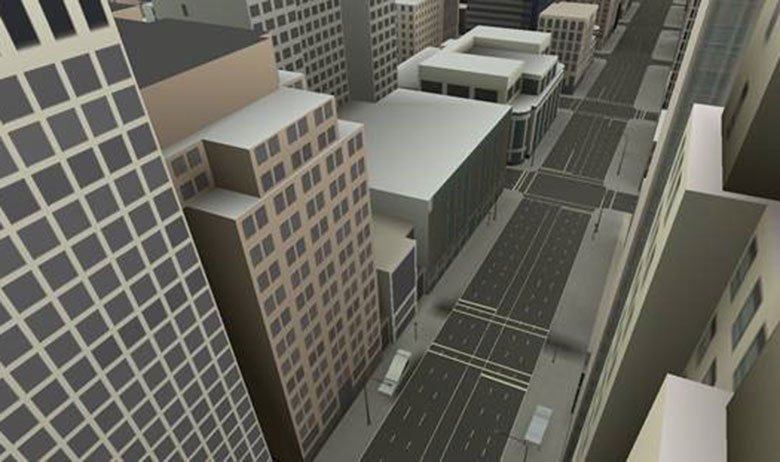

One aspect of this is the creation of ultra-precise, high-definition (HD) maps that, through the cloud, can map an area down to the centimeter and constantly feed new and relevant information to the vehicle as it makes its automated journey from A to B.

Why autonomous cars need a highly precise map

An HD map is needed not only to let a vehicle position itself precisely, both laterally and longitudinally, but also to enable the car to maneuver correctly. Sensors on autonomous cars can see around 100 meters ahead, which means a car traveling at 80 mph has a sensing horizon of only about 3 seconds. Any deployment will therefore need to rely on connectivity to provide what HERE calls an "extended sensor": cloud services that help cars extend their sensor range and "peek" around the corner.
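The 3-second figure follows from simple arithmetic: the sensing horizon is the sensor range divided by the vehicle's speed. A minimal Python sketch, assuming a fixed 100 m sensor range and constant speed (the function name is ours, purely illustrative):

```python
# Sensing horizon: how many seconds of road ahead the onboard sensors cover.
# Assumes a fixed sensor range (the ~100 m figure from the text) and constant speed.

MPH_TO_MPS = 0.44704  # exact: 1 mile = 1609.344 m, 1 hour = 3600 s


def sensing_horizon_s(sensor_range_m: float, speed_mph: float) -> float:
    """Return the time in seconds before the car reaches the edge of its sensor range."""
    if speed_mph <= 0:
        raise ValueError("speed must be positive")
    speed_mps = speed_mph * MPH_TO_MPS
    return sensor_range_m / speed_mps


if __name__ == "__main__":
    horizon = sensing_horizon_s(100.0, 80.0)
    print(f"{horizon:.2f} s")
```

At 80 mph (about 35.8 m/s) this gives roughly 2.8 s, which rounds to the 3 seconds quoted above; at 50 mph the same 100 m buys only about 4.5 s.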

This is better explained with a few real-life examples.

Consider a scenario where a highly automated car is behind a slower truck on a motorway and wishes to overtake it. The car must be able to answer several questions: Is there another lane it could move into? Are there legal restrictions preventing vehicles from overtaking or from driving in the other lane? Is the lane wide enough? Is the stretch of road long enough for the car to complete the pass before the lane configuration changes?

When you consider how much information is needed to make these maneuvers, you realize how richly detailed a map of this kind needs to be.
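The overtaking questions above map naturally onto a sequence of checks against lane data. The sketch below is a hypothetical illustration: the `Lane` fields, the `can_overtake` helper, the 3 m minimum width, the 20 m safety gaps and the constant-speed pass model are all our assumptions, not HERE's data model or decision logic. It also assumes right-hand traffic, so the overtaking lane is the left neighbor.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lane:
    """Hypothetical lane record; field names are illustrative, not a HERE schema."""
    lane_id: str
    width_m: float
    overtaking_allowed: bool      # may vehicles in this lane legally overtake?
    open_to_traffic: bool         # may this vehicle legally drive in this lane?
    length_until_change_m: float  # distance before the lane configuration changes
    left_neighbor: Optional["Lane"] = None


def required_pass_distance_m(ego_speed_mps: float, truck_speed_mps: float,
                             truck_length_m: float, safety_gap_m: float) -> float:
    """Distance the ego car travels while passing, assuming both speeds stay constant."""
    closing_speed = ego_speed_mps - truck_speed_mps
    if closing_speed <= 0:
        return float("inf")  # not faster than the truck: the pass never completes
    # Relative distance to gain: gap behind the truck + truck length + gap in front.
    relative_distance = truck_length_m + 2 * safety_gap_m
    return ego_speed_mps * relative_distance / closing_speed


def can_overtake(current: Lane, ego_speed_mps: float, truck_speed_mps: float,
                 truck_length_m: float, min_width_m: float = 3.0,
                 safety_gap_m: float = 20.0) -> bool:
    target = current.left_neighbor                 # right-hand traffic assumed
    if target is None:                             # Is there another lane?
        return False
    if not (current.overtaking_allowed and target.open_to_traffic):  # Legal?
        return False
    if target.width_m < min_width_m:               # Wide enough?
        return False
    needed = required_pass_distance_m(ego_speed_mps, truck_speed_mps,
                                      truck_length_m, safety_gap_m)
    return target.length_until_change_m >= needed  # Long enough?


if __name__ == "__main__":
    fast_lane = Lane("L2", 3.5, True, True, 500.0)
    slow_lane = Lane("L1", 3.5, True, True, 500.0, left_neighbor=fast_lane)
    # Ego car at 30 m/s behind a 16.5 m truck doing 25 m/s.
    print(can_overtake(slow_lane, 30.0, 25.0, 16.5))  # True
```

In the example the car must gain 56.5 m on the truck at a 5 m/s closing speed, so it covers 339 m during the pass: a 500 m stretch is long enough, while a lane that changes configuration after 300 m would not be. Keeping each question as its own early return makes it easy to see which map attribute blocked a maneuver.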

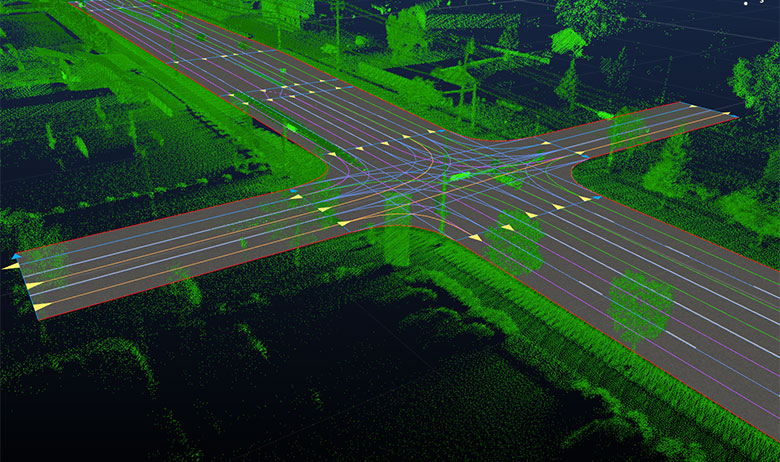

Such a lane model needs not only precise lane geometry with lane boundaries but also rich attribution, such as lane traversal information, lane types, lane marking types and lane-level speed limits. Sensors alone cannot supply all of this: their reach is limited, GPS will place a car within a few meters at best, and obstructions mean that in certain situations sensors are simply unable to detect this information.
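One way to picture such a lane model is as a small set of typed records. In this minimal sketch, the class names, enum values, the matched-sampling width estimate and the rule that only dashed or unmarked boundaries may be crossed are simplifying assumptions for illustration; real HD map formats (and real traffic law) carry far richer traversal rules.

```python
import math
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Tuple


class MarkingType(Enum):
    SOLID = "solid"
    DASHED = "dashed"
    DOUBLE_SOLID = "double_solid"
    NONE = "none"


class LaneType(Enum):
    REGULAR = "regular"
    HOV = "hov"
    EXIT = "exit"
    SHOULDER = "shoulder"


@dataclass
class LaneBoundary:
    points: List[Tuple[float, float]]  # boundary polyline (x, y) in meters, local frame
    marking: MarkingType


@dataclass
class Lane:
    lane_id: str
    lane_type: LaneType
    left: LaneBoundary
    right: LaneBoundary
    speed_limit_kph: int  # lane-level: may differ from neighboring lanes


def approx_width_m(lane: Lane) -> float:
    """Mean distance between paired boundary points (assumes matched sampling)."""
    pairs = list(zip(lane.left.points, lane.right.points))
    return sum(math.dist(a, b) for a, b in pairs) / len(pairs)


def traversable(boundary: LaneBoundary) -> bool:
    """Simplified rule: only dashed or unmarked boundaries may be crossed."""
    return boundary.marking in (MarkingType.DASHED, MarkingType.NONE)


def traversal_options(lane: Lane) -> Dict[str, bool]:
    """Which sides of this lane the car may leave by, derived from marking types."""
    return {"left": traversable(lane.left), "right": traversable(lane.right)}


if __name__ == "__main__":
    lane = Lane(
        lane_id="L1",
        lane_type=LaneType.REGULAR,
        left=LaneBoundary([(0.0, 3.5), (100.0, 3.5)], MarkingType.DASHED),
        right=LaneBoundary([(0.0, 0.0), (100.0, 0.0)], MarkingType.SOLID),
        speed_limit_kph=120,
    )
    print(traversal_options(lane))  # {'left': True, 'right': False}
    print(approx_width_m(lane))     # 3.5
```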

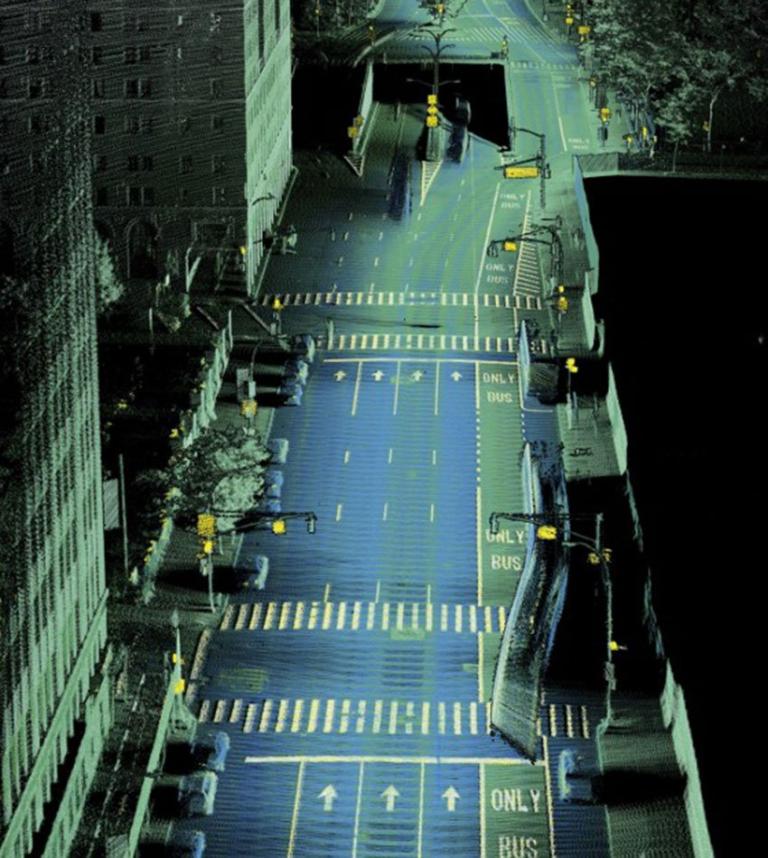

HERE has already started to collect this richly detailed information, and one way it does so is with LiDAR, a laser-based scanning technique that we explained in an earlier post. HERE cars equipped with LiDAR and imaging technology are currently out on roads across the globe, capturing the world in 3D.

Once the data has been processed, the result is a 3D representation of the street environment that closely resembles its real-world counterpart, with an accuracy of 10-20 cm, a level of map precision we consider adequate for highly automated driving.

A map as a natural canvas for sensor data

One could argue that although sensors currently have limited reach, they are evolving quickly and could soon fill the gap, removing the need for an HD map.

The truth is that the HD map can itself be considered a sensor, one with potentially infinite reach. More importantly, the map is the “natural canvas” on which to store all the data coming from the car's sensors and from the cloud, in a form the car can read. It is the interface between the real world and the autonomous vehicle.

Unlike infotainment maps, HD maps provide more information, at higher fidelity, for attributes such as roadside barriers or off-ramps. While autonomous cars are well equipped with sensors, every time they drive a road they are, in effect, recognizing it for the first time. Sensors have no “memory” of the road, so the HD map provides the context: a reference against which the car can tell whether something has changed. Has a lane divider been knocked down? Has a tree fallen into the street?
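The idea of the map as memory can be illustrated with a toy change detector: features the map expects within sensor range but the sensors do not see are flagged as possibly missing (a knocked-down divider), and observations with no counterpart in the map are flagged as unexpected (a fallen tree). The `Feature` type, the 100 m range and the 0.5 m matching radius are hypothetical choices for this sketch, not a description of how HERE detects changes.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Feature:
    """Hypothetical map/sensor feature in a local metric frame."""
    kind: str  # e.g. "lane_divider", "barrier", "obstacle"
    x: float   # meters
    y: float   # meters


def _matches(a: Feature, b: Feature, radius_m: float) -> bool:
    """Same kind of feature, within radius_m of each other."""
    return a.kind == b.kind and math.hypot(a.x - b.x, a.y - b.y) <= radius_m


def detect_changes(map_features: List[Feature], observations: List[Feature],
                   ego_xy: Tuple[float, float], sensor_range_m: float = 100.0,
                   match_radius_m: float = 0.5) -> Tuple[List[Feature], List[Feature]]:
    """Compare what the map expects within sensor range with what the sensors saw.

    Returns (missing, unexpected): map features with no matching observation,
    and observations with no matching map feature.
    """
    ex, ey = ego_xy
    expected = [f for f in map_features
                if math.hypot(f.x - ex, f.y - ey) <= sensor_range_m]
    missing = [f for f in expected
               if not any(_matches(f, o, match_radius_m) for o in observations)]
    unexpected = [o for o in observations
                  if not any(_matches(o, f, match_radius_m) for f in expected)]
    return missing, unexpected


if __name__ == "__main__":
    hd_map = [Feature("lane_divider", 10.0, 0.0), Feature("barrier", 20.0, 3.5)]
    seen = [Feature("barrier", 20.1, 3.5), Feature("obstacle", 15.0, 1.0)]
    missing, unexpected = detect_changes(hd_map, seen, ego_xy=(0.0, 0.0))
    print("possibly missing:", [f.kind for f in missing])  # ['lane_divider']
    print("not in map:", [o.kind for o in unexpected])     # ['obstacle']
```

Restricting the comparison to features within sensor range matters: a map feature the car simply cannot see yet should not be reported as missing.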

The real world is changing all the time. HERE currently makes around 2.7 million changes to its global map database every day, which illustrates just how rapid and numerous those changes are. An up-to-date map makes life easier for simple navigation; for safe autonomous driving, it is downright essential.

A vehicle needs not only to localize itself but also to be aware of, and respond to, events occurring on the road. To serve this purpose, the map needs to be updated second by second. This requires a continuous validation and update mechanism: vehicles share and validate information about the road network with the HERE Cloud, where the data is constantly aggregated and fed back as coherent updates to the underlying map, the canvas that the car can understand.
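The validation step can be sketched as a simple consensus rule: a reported change is accepted only once enough distinct vehicles confirm it within a recent time window. The `Report` record, the 5-minute window and the three-vehicle threshold are illustrative assumptions; the post does not describe how the HERE Cloud actually weighs or validates reports.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import DefaultDict, Iterable, Set, Tuple


@dataclass(frozen=True)
class Report:
    """Hypothetical vehicle report about one road segment."""
    vehicle_id: str
    segment_id: str
    event: str          # e.g. "lane_closed", "divider_missing"
    timestamp_s: float  # seconds, same clock as now_s


def confirmed_changes(reports: Iterable[Report], now_s: float,
                      window_s: float = 300.0,
                      min_vehicles: int = 3) -> Set[Tuple[str, str]]:
    """Return (segment_id, event) pairs confirmed by enough distinct vehicles.

    Only reports from the last window_s seconds count, so stale reports expire.
    """
    vehicles_by_change: DefaultDict[Tuple[str, str], Set[str]] = defaultdict(set)
    for r in reports:
        if now_s - window_s <= r.timestamp_s <= now_s:
            vehicles_by_change[(r.segment_id, r.event)].add(r.vehicle_id)
    return {change for change, vehicles in vehicles_by_change.items()
            if len(vehicles) >= min_vehicles}


if __name__ == "__main__":
    reports = [
        Report("v1", "A", "lane_closed", 100.0),
        Report("v2", "A", "lane_closed", 110.0),
        Report("v3", "A", "lane_closed", 120.0),
        Report("v4", "B", "lane_closed", 150.0),  # same car reporting twice
        Report("v4", "B", "lane_closed", 160.0),
    ]
    print(confirmed_changes(reports, now_s=200.0))  # {('A', 'lane_closed')}
```

Counting distinct vehicle IDs, rather than raw reports, prevents a single car with a faulty sensor from pushing an update on its own.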

This is where the cloud comes into play. By dividing the world into small spatial partitions, the cloud allows a particular car to receive relevant data in near real time without overloading the system. After all, a car does not need an HD map of a whole country or continent; it needs up-to-date information about its immediate environment.
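A simple way to picture spatial partitioning is an equal-angle grid over latitude and longitude: at level `n`, each tile spans 180/2^n degrees, and a car requests only the tile it is in plus its eight neighbors. This is a minimal sketch under that assumption; the function names and the choice of level are ours, and production tiling schemes, including HERE's, may differ in detail.

```python
import math
from typing import List, Tuple

Tile = Tuple[int, int, int]  # (level, column, row)


def tile_of(lat: float, lon: float, level: int) -> Tile:
    """Map a latitude/longitude position to a tile in an equal-angle grid."""
    size_deg = 180.0 / (2 ** level)  # tile edge length in degrees
    n_cols, n_rows = 2 ** (level + 1), 2 ** level
    col = int(math.floor((lon + 180.0) / size_deg))
    row = int(math.floor((lat + 90.0) / size_deg))
    # Clamp the edge cases lon == 180 and lat == 90 into the last column/row.
    return level, min(col, n_cols - 1), min(row, n_rows - 1)


def tiles_around(lat: float, lon: float, level: int) -> List[Tile]:
    """The car's own tile plus its neighbors; columns wrap at the antimeridian."""
    _, col, row = tile_of(lat, lon, level)
    n_cols, n_rows = 2 ** (level + 1), 2 ** level
    tiles = set()
    for dr in (-1, 0, 1):
        r = row + dr
        if not 0 <= r < n_rows:  # no wrapping past the poles
            continue
        for dc in (-1, 0, 1):
            tiles.add((level, (col + dc) % n_cols, r))
    return sorted(tiles)


if __name__ == "__main__":
    # A car in central Berlin asks only for the nine tiles around it.
    for tile in tiles_around(52.52, 13.405, level=14):
        print(tile)
```

At level 14 each tile is about 0.011 degrees of latitude (roughly 1.2 km) tall, so the nine tiles around a car cover a few kilometers of road rather than a continent.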

Stay tuned for the last piece in this series, where we will look at the need to humanize the driving experience so that automated driving feels natural and comfortable, something driverless cars must achieve if they are to become commercially viable products.

Three things from HERE that will make autonomous cars a reality:

1. HD Map – because autonomous cars can only understand the real world through a map
2. Live Roads – because autonomous cars have to see around the corner
3. Humanized Driving – because autonomous cars have to make passengers feel relaxed and comfortable
