The Moral Machine tests the ethics behind self-driving cars

Human drivers make judgement calls on a daily basis, and in the same scenarios autonomous cars will need to react, too. That means programming machines to pick the best possible outcome, but what happens when it comes down to an ethical choice? In a bid to understand the way machines will need to respond and to help gather a human perspective, a team at MIT have developed the Moral Machine.

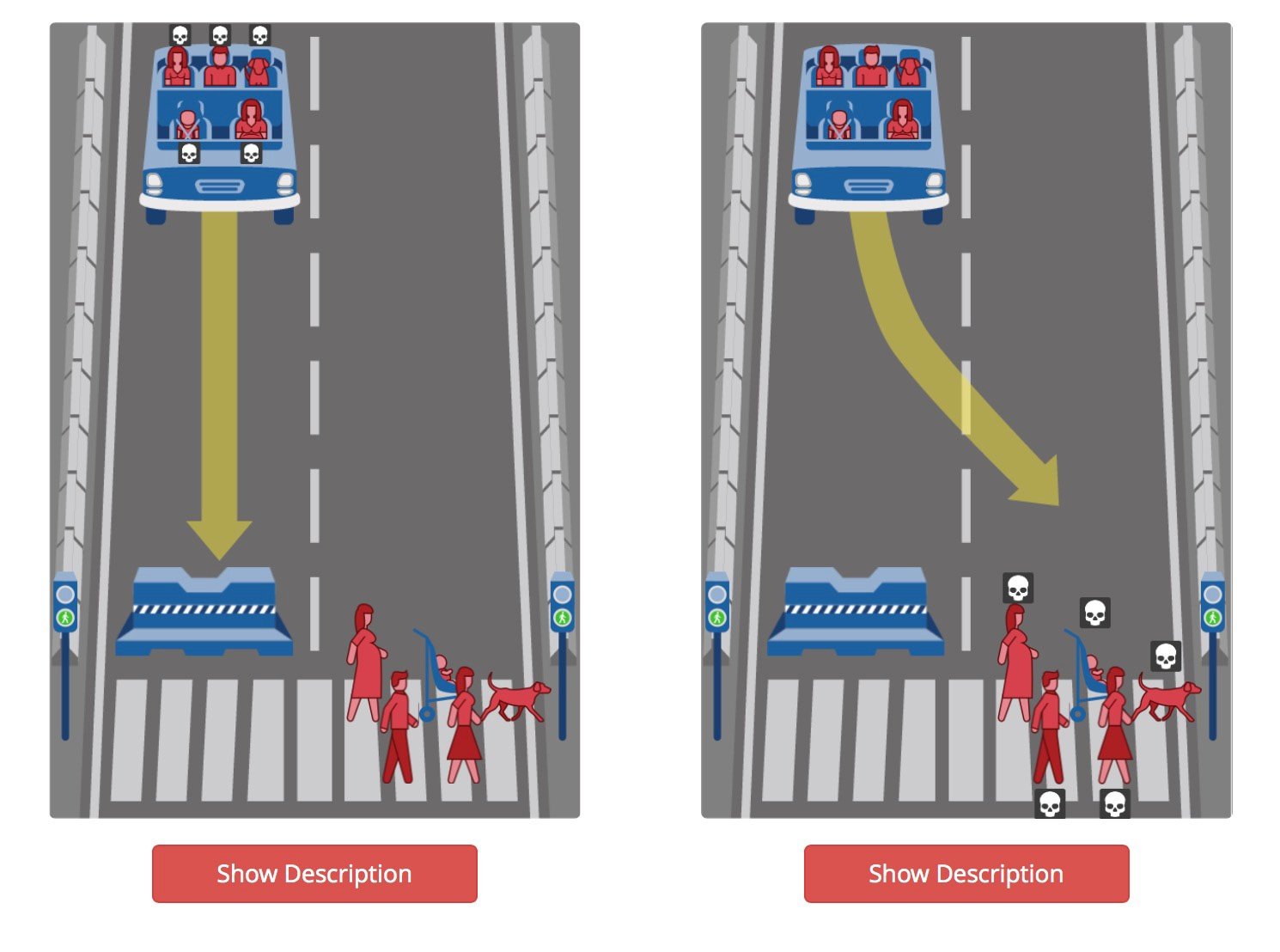

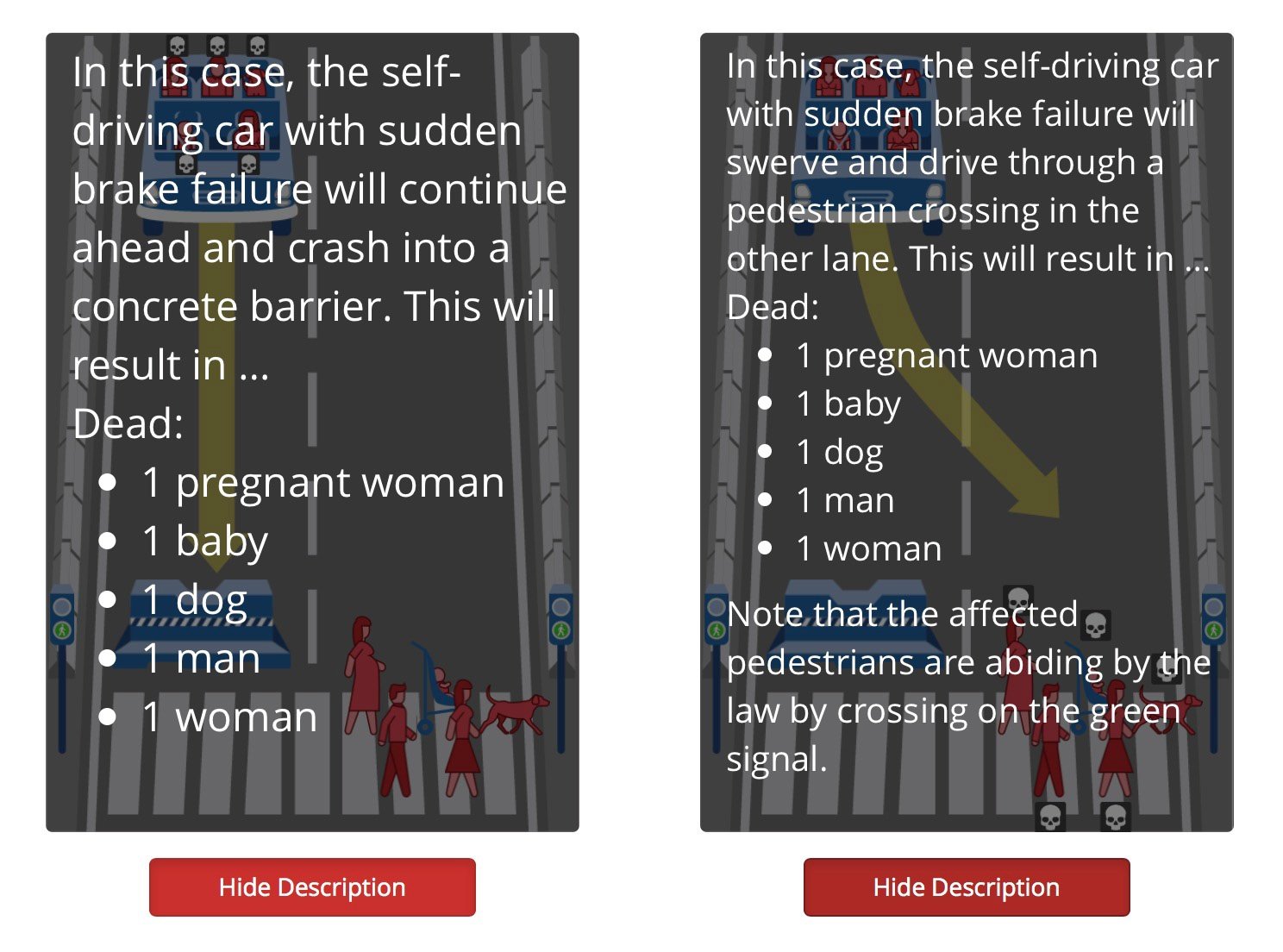

The Moral Machine highlights the ethical dilemmas thrown up when autonomous cars need to choose between two evils. As a driver, you’d make a judgement call, but are your choices the same as other people’s? Moral Machine lets you judge the best possible outcome and compare your responses with others’: the results may well surprise you.

We spoke to Sohan Dsouza, a student at MIT who helped to develop the Moral Machine, to find out more.

“We had already been doing some research on the ethics of self-driving vehicles in simpler ‘unavoidable harm’ scenarios, and we wanted to build a platform that could generate more complex ones for public assessment, both to provoke public dialogue and to build a crowd-sourced picture of how humans perceive moral decisions made by intelligent machine agents.”

Those scenarios revolve around crashes, and what the car should choose to hit in an unavoidable accident. Should the car swerve into a barrier, killing its passenger, to save the lives of five pedestrians, for example? Or should the car put the life of its passenger first? What happens if there’s a dog, an old person or a child? The Moral Machine throws up a whole host of scenarios, and you can even create your own.

“The scenarios are randomly generated within a set of constraints,” explains Sohan. “The variables include classic trolley problem parameters like numbers of people on each side of the moral equation, and autonomous vehicle parameters like positioning the characters as passengers in the car or as pedestrians on the road. The other factors have been shown to be of consequence in psychological studies conducted in other contexts, and we want to find out if such biases exist in our scenarios as well.”
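To make the idea of random generation within constraints a little more concrete, here is a minimal Python sketch. It is not the Moral Machine's actual code: the character types, the maximum group size and the rule that only one side of the dilemma can be inside the car are all assumptions made for illustration.

```python
import random
from dataclasses import dataclass
from typing import List

# Hypothetical values chosen for illustration only.
CHARACTER_TYPES = ["adult", "child", "elderly person", "dog"]
MAX_GROUP_SIZE = 5


@dataclass
class Group:
    role: str              # "passengers" or "pedestrians"
    characters: List[str]  # who is harmed if this outcome is chosen


@dataclass
class Scenario:
    stay: Group    # harmed if the car stays on course
    swerve: Group  # harmed if the car swerves


def random_group(rng: random.Random, role: str) -> Group:
    """Pick between 1 and MAX_GROUP_SIZE random characters for one side."""
    size = rng.randint(1, MAX_GROUP_SIZE)
    return Group(role, [rng.choice(CHARACTER_TYPES) for _ in range(size)])


def generate_scenario(rng: random.Random) -> Scenario:
    """Generate a two-outcome dilemma under one assumed constraint:
    at most one side of the dilemma may be the car's passengers."""
    stay_role = rng.choice(["passengers", "pedestrians"])
    if stay_role == "passengers":
        swerve_role = "pedestrians"
    else:
        swerve_role = rng.choice(["passengers", "pedestrians"])
    return Scenario(random_group(rng, stay_role), random_group(rng, swerve_role))


if __name__ == "__main__":
    print(generate_scenario(random.Random()))
```

Passing in a seeded `random.Random` makes a run repeatable, which is handy if you want to show the same dilemma to more than one person.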

The Moral Machine is interesting because there’s no objectively correct response to any of the scenarios, so your own judgement may differ greatly from that of other people or autonomous cars.

“Unavoidable harm dilemmas – such as the classic trolley problems – are fundamentally hard to solve to everyone’s satisfaction,” says Sohan. “Professional philosophers and ethicists have been wrestling with them for years, and framing them in the context that includes an inorganic decision-maker and characters of unequal status with relation to it, only make them harder to solve.”

“What we do know is that self-driving cars are practically inevitable now, and will have to be actively programmed one way or another. Hence, the need to understand the broad perspectives and get the conversation started on the problem, as the Moral Machine was designed to do.”

With a huge number of randomly generated scenarios to work through, the data is still being analysed and the Moral Machine platform further refined, Sohan points out. “However, preliminary analysis looking at aggregates reveals what appear to be broad cultural differences between countries in the extent to which they prioritise casualty-count minimisation and passenger status.”
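As a rough illustration of what an aggregate like this could look like, the Python sketch below works out, for each country, the share of responses that picked the outcome with fewer casualties. The record layout, the country labels and the numbers are all made up for the example; none of it is Moral Machine data or the team's actual method.

```python
from collections import defaultdict

# Made-up toy records: (country label, casualties in the chosen outcome,
# casualties in the rejected outcome).
responses = [
    ("Country A", 1, 5),
    ("Country A", 5, 1),
    ("Country A", 2, 4),
    ("Country B", 1, 3),
    ("Country B", 1, 5),
]


def minimisation_rate(records):
    """Return, per country, the fraction of responses that chose the
    outcome with fewer casualties. Ties are skipped, since neither
    choice minimises the casualty count."""
    chose_fewer = defaultdict(int)
    total = defaultdict(int)
    for country, chosen, rejected in records:
        if chosen == rejected:
            continue
        total[country] += 1
        if chosen < rejected:
            chose_fewer[country] += 1
    return {country: chose_fewer[country] / total[country] for country in total}


rates = minimisation_rate(responses)
for country, rate in sorted(rates.items()):
    print(f"{country}: {rate:.0%} of responses minimised casualties")
```

A real analysis would also have to account for the other variables in each scenario, such as passenger status, before reading anything into a gap between countries.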

Unequal status plays a big part in the Moral Machine, and Sohan explains that factors including age, fitness and gender have been shown to affect people’s views of moral equations in previous psychological studies.

“We have included them among the variables in Moral Machine scenarios to find out if they make any difference in the context of machine ethics in moral dilemmas. We do not intend to suggest that these should play any role in moral decision-making for self-driving cars.”

While the initial intention may not be to influence how autonomous cars think, the Moral Machine can help to drive understanding of the choices that machines will have to make. Sohan adds:

“The understanding of what people consider morally acceptable trade-offs and their perspectives on machine ethics can certainly contribute immensely to the academic study of ethics and moral psychology. The data can serve as a source of ground truth for the theorists, especially considering the size of the dataset: tens of millions of scenarios assessed by millions of users from countries around the world.”

What that means for the wider automotive industry remains to be seen, but, if nothing else, Sohan hopes the Moral Machine will spark debate about the ethics involved in autonomous technology:

“It is too early to say whether it will be put to use in programming actual driverless cars. Whether or not it happens and the extent to which it happens will, in any case, depend on the outcome of dialogue among lawmakers, car manufacturers, insurers, drivers, and the public. Provoking such dialogue is one of our main goals in creating the Moral Machine.”

Interested in finding out more? Check out the Moral Machine to see what choices you’d make.
