HERE Workspace & Marketplace 2.12 release

Highlights

Connect devices directly to the Open Location Platform with the SDK for C++ and SDK for TypeScript

Two open-source SDKs are available to connect devices directly to OLP:

- The SDK for C++ enables you to connect devices such as smartphones, IoT robots and cars, and provides read/write data access, authentication and error handling on connected devices. With this release, you can use cached map data without connectivity.

- The SDK for TypeScript enables read access to data in OLP from your web applications and connected devices. This SDK release supports read access to Versioned, Volatile and Index layers.

Both SDKs are open-source projects hosted on GitHub, with one repository for C++ and one for TypeScript. More information is available in the documentation for each SDK.

Access additional HERE Map Content - Navigation Attributes via the Optimized Map for Location Library

The following navigation attributes from the HERE Map Content catalog are now available: Road Divider, Road Usage, Speed Category, Low Mobility, Local Road, Lane Count, Lane Category and Intersection Internal. We will continue to iteratively compile HERE Map Content attributes into the Optimized Map for Location Library, enabling fast and simplified direct access to these attributes via the Location Library. For more detail on the added attributes, see the HERE Map Content - Navigation Attributes layer.

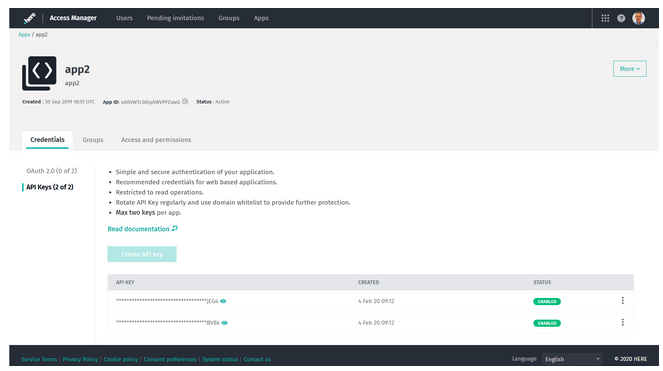

Generate API Key credentials directly in OLP

The Platform Access Manager now enables you to create API Key credentials, the recommended credential type for web-based applications. With this addition, you can generate both OAuth 2.0 token and API Key credentials for each unique APP ID. API Key credentials provide simple and secure authentication of your application. Use the key-rotation feature of API Key credentials to keep your application secure over time: create a second API Key for your application, then delete the original key when it is no longer required. You can use a maximum of two API Keys at once for each application.

At this time, there are two Platform Location Services that support authentication with API Key credentials (this support is not yet available in China). Using these two examples, you can substitute the API Key credential generated in Platform Access Manager for the "API_KEY_HERE" placeholder text:

- Routing

  - https://router.hereapi.com/v1/routes?apikey=API_KEY_HERE&destination=52.490613,13.418212&origin=52.535326,13.384566&return=polyline,actions,instructions,summary&transportMode=car

- Rendering - Vector Tiles

  - https://vector.hereapi.com/v2/vectortiles/base/mc/11/1100/673/omv?apikey=API_KEY_HERE

Support for additional Platform Location Services as well as support of those services in China will be announced at a later time.
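As a rough illustration, the two example requests above could be assembled in Python as follows. This is a minimal sketch, not an official client: the function names `routing_request_url` and `vector_tile_url` are hypothetical, and only the URLs and query parameters shown in the examples are used. The code builds the request URLs and does not send them.

```python
from urllib.parse import urlencode

# Endpoints copied from the two examples above.
ROUTING_URL = "https://router.hereapi.com/v1/routes"
VECTOR_TILE_URL = "https://vector.hereapi.com/v2/vectortiles/base/mc/{z}/{x}/{y}/omv"


def routing_request_url(api_key, origin, destination):
    """Build the car-routing request URL from the example (hypothetical helper)."""
    params = {
        "apikey": api_key,
        "destination": destination,
        "origin": origin,
        "return": "polyline,actions,instructions,summary",
        "transportMode": "car",
    }
    # safe="," leaves the commas in coordinates and in the return list unescaped.
    return ROUTING_URL + "?" + urlencode(params, safe=",")


def vector_tile_url(api_key, z, x, y):
    """Build the vector tile request URL from the example (hypothetical helper)."""
    return VECTOR_TILE_URL.format(z=z, x=x, y=y) + "?" + urlencode({"apikey": api_key})
```

Replace `API_KEY_HERE` with the credential generated in Platform Access Manager, for example `routing_request_url(my_key, "52.535326,13.384566", "52.490613,13.418212")`. Loading the key from configuration rather than hard-coding it makes rotating to a second key simpler.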

Changes, Additions and Known Issues

SDK for Java and Scala

Changed: Continued documentation improvements have been made for Java and Scala developers. With this release, the experience is further streamlined by merging this documentation with the tutorials. Detailed dependency information has also been added for the SDK as well as the Pipeline Environments.

Changed: The Data Visualization Library has been renamed to Data Inspector Library to better align it with the naming of the Inspect tab of the Data section in the Portal UI.

This TypeScript-based library is now also separate from the OLP SDK for Java and Scala. Java and Scala developers can still use the library for visual debugging of their pipeline applications, although most will do so directly through the application in the Portal UI. Other audiences can create a customized Data Inspector application that lets users without Platform access review Platform data through a hosted service.

To read about more updates to the SDK for Java and Scala, please visit the HERE Open Location Platform Changelog.

Web & Portal

Issue: The custom run-time configuration for a Pipeline Version has a limit of 64 characters for the property name and 255 characters for the value.

Workaround: For the property name, you can define a shorter name in the configuration and map that to the actual, longer name within the pipeline code. For the property value, you must stay within the limitation.
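The name-mapping workaround can be sketched in Python as follows. This is an illustration only: the property names, the `SHORT_TO_LONG` table and both helper functions are hypothetical, and only the 64- and 255-character limits come from the issue described above.

```python
MAX_NAME_LENGTH = 64    # documented limit for a property name
MAX_VALUE_LENGTH = 255  # documented limit for a property value

# Hypothetical long name used inside the pipeline code (74 characters).
LONG_NAME = "com.example.pipeline.input.catalog.hrn.for.the.primary.road.network.source"

# Hypothetical table: short name set in the run-time configuration -> long name.
SHORT_TO_LONG = {"in.cat": LONG_NAME}


def check_limits(config):
    """Return a description of every entry that exceeds the documented limits."""
    problems = []
    for name, value in config.items():
        if len(name) > MAX_NAME_LENGTH:
            problems.append(f"name too long: {name}")
        if len(value) > MAX_VALUE_LENGTH:
            problems.append(f"value too long for: {name}")
    return problems


def expand_names(config):
    """Translate short property names to the long names used in the pipeline code."""
    return {SHORT_TO_LONG.get(name, name): value for name, value in config.items()}
```

The pipeline would call `expand_names` once at startup on the configuration it receives, so the rest of the code can keep using the long names.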

Issue: Pipeline Templates can't be deleted from the Portal UI.

Workaround: Use the CLI or API to delete Pipeline Templates.

Issue: In the Portal, new jobs and operations are not automatically added to the list of jobs and operations for a pipeline version while the list is open for viewing.

Workaround: Refresh the Jobs and Operations pages to see the latest job or operation in the list.

Projects & Access Management

Issue: Only a finite number of access tokens (approximately 250) is available for each app or user. Depending on the number of resources included, this number may be smaller.

Workaround: Create a new app or user if you reach the limitation.

Issue: Only a finite number of permissions is allowed for each app or user in the system across all services. This limit decreases depending on the resources included and the types of permissions.

Issue: All users and apps in a group are granted permissions to perform all actions on any pipeline associated with that group. There is no support for users or apps with limited permissions. For example, you cannot have a reduced role that can only view pipeline status, but not start and stop a pipeline.

Workaround: Limit the users in a pipeline's group to only those users who should have full control over the pipeline.

Issue: When updating permissions, it can take up to an hour for changes to take effect.

Issue: Projects and all resources in a Project are designed for use only in Workspace and are unavailable for use in Marketplace. For example, a catalog created in a Platform Project can only be used in that Project. It cannot be marked as "Marketplace ready" and cannot be listed in the Marketplace.

Workaround: Do not create catalogs in a Project when they are intended for use in both Workspace and Marketplace.

Data

Added: Three new layers of content have been added to the HERE Map Content catalog (hrn:here:data::olp-here:rib-2); the layers include Postal Area Boundaries, Postal Code Points and Electric Vehicle Charging Stations.

Fixed: Resolved an issue, found after the production release, to support backward compatibility of Catalog version dependency HRN comparisons (used in version resolution). With the rollout of the new Catalog HRN format including OrgID (that is, the realm) in OLP 2.9, the Catalog dependency version resolution function could not find compatible versions when the dependencies contained a mix of Catalog HRNs in the old and new formats. To address this issue, the following change was made globally after the OLP 2.10 release:

- Metadata API (in "Gets catalog versions" in https://developer.here.com/olp/documentation/data-api/api-reference-metadata.html) will return dependency HRNs in old and new formats at the same time for all historical and newly committed Catalog versions.

Deprecated: Catalog HRNs without OrgID will no longer be supported in any way after July 31, 2020.

- Referencing catalogs and all other interactions with REST APIs using the old HRN format without OrgID or by CatalogID will stop working after July 31, 2020.

- Please ensure all HRN references in your code are updated to use Catalog HRNs with OrgID before July 31, 2020 so your workflows continue to work.

- HRN duplication to ensure backward compatibility of Catalog version dependencies resolution will no longer be supported after July 31, 2020.

- Examples of old and new Catalog HRN formats:

  - Old (without OrgID/realm): hrn:here:data:::my-catalog

  - New (with OrgID/realm): hrn:here:data::OrgID:my-catalog
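To help locate references that still need updating, a scan along the following lines may be useful. It is a minimal sketch: the regular expression is inferred from the two example HRNs above (an empty OrgID field shows up as three consecutive colons after `data`), the allowed characters are an assumption, and `find_old_format_hrns` is a hypothetical helper name.

```python
import re

# Matches Catalog HRNs with an empty OrgID field, e.g. hrn:here:data:::my-catalog.
# The character classes are an assumption based on the examples above.
OLD_CATALOG_HRN = re.compile(r"hrn:[\w-]+:data:::[\w-]+")


def find_old_format_hrns(text):
    """Return every old-format Catalog HRN found in the given text."""
    return OLD_CATALOG_HRN.findall(text)
```

Running this over configuration files or source code returns the HRNs that still lack an OrgID, while HRNs already in the new format are not reported.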

Issue: The changes released with 2.9 (RoW) and with 2.10 (China) to add OrgID to Catalog HRNs and with 2.10 (Global) to add OrgID to Schema HRNs could impact any use case (CI/CD or other) where comparisons are performed between HRNs used by various workflow dependencies. For example, requests to compare HRNs that a pipeline is using vs what a Group, User or App has permissions to will result in errors if the comparison is expecting results to match the old HRN construct. With this change, Data APIs will return only the new HRN construct which includes the OrgID (e.g. olp-here…) so a comparison between the old HRN and the new HRN will be unsuccessful.

- Reading from and writing to Catalogs using old HRNs is not broken and will continue to work until July 31, 2020.

- Referencing old Schema HRNs is not broken and will continue to work indefinitely.

Workaround: Update any workflows comparing HRNs to perform the comparison against the new HRN construct, including OrgID.
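One way to apply this workaround is to bring both HRNs into the new construct before comparing them. The sketch below assumes the six-field, colon-separated layout seen in the Catalog HRN examples in these notes and assumes that you already know your own OrgID. The helpers `with_org_id` and `same_catalog` are hypothetical and not part of any HERE library.

```python
def with_org_id(hrn, org_id):
    """Return the HRN in the new construct, filling in org_id if the field is empty."""
    parts = hrn.split(":")
    if len(parts) != 6 or parts[0] != "hrn":
        raise ValueError(f"unexpected HRN layout: {hrn}")
    if not parts[4]:  # old construct: the OrgID field is empty
        parts[4] = org_id
    return ":".join(parts)


def same_catalog(hrn_a, hrn_b, org_id):
    """Compare two Catalog HRNs after normalizing both to the new construct."""
    return with_org_id(hrn_a, org_id) == with_org_id(hrn_b, org_id)
```

With this approach, an old HRN stored in a workflow and the new HRN returned by the Data APIs compare as equal only when they refer to the same catalog in the same realm.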

Issue: Versions of the Data Client Library prior to 2.9 did not compress or decompress data correctly per the configuration set in Stream layers. We changed this behavior in 2.9 to strictly adhere to the compression setting in the Stream layer configuration, but doing so broke backward compatibility: reading data that was ingested with one Data Client Library version and consumed with a different version will likely fail. The Data Client Library will throw an exception which, depending on how your application handles it, could lead to an application crash or downstream processing failure. This adverse behavior is caused by inconsistent compression and decompression of the data across the different Data Client Library versions. Version 2.10 introduces more tolerant behavior that detects whether stream data is compressed and handles it accordingly.

Workaround: In the case where you are using compressed Stream layers and streaming messages smaller than 2MB, use the 2.8 SDK until you have confirmed that all of your customers are using at least the 2.10 SDK where this Data Client Library issue is resolved, then upgrade to the 2.10 version for the writing aspects of your workflow.
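For applications that handle raw stream payloads themselves, the idea of tolerant reading can be illustrated with the sketch below. It is not the Data Client Library's implementation: it assumes the payload is gzip-compressed when compression is enabled, and `read_payload` is a hypothetical helper that checks for the gzip magic bytes before decompressing.

```python
import gzip

GZIP_MAGIC = b"\x1f\x8b"  # first two bytes of every gzip stream


def read_payload(data: bytes) -> bytes:
    """Return the payload, decompressing it only if it looks gzip-compressed."""
    if data[:2] == GZIP_MAGIC:
        return gzip.decompress(data)
    return data
```

A check like this cannot distinguish compressed data from an uncompressed payload that happens to start with the same two bytes, so treat it as a fallback rather than a replacement for upgrading the library.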

Issue: Searching for a schema in the Portal using the old HRN construct will return only the latest version of the schema. The Portal will currently not show older versions tied to the old HRN.

Workaround: Search for schemas using the new HRN construct, or look up older versions of schemas by the old HRN construct using the OLP CLI.

Issue: Visualization of Index layer data is not yet supported.

Pipelines

Issue: It can sometimes take several minutes for a pipeline failure or exception to be reported.

Issue: Pipelines can still be activated after a catalog is deleted.

Workaround: The pipeline will fail when it starts running and will show an error message about the missing catalog. Re-check the missing catalog or use a different catalog.

Issue: If several pipelines are consuming data from the same Stream layer and belong to the same Group (pipeline permissions are managed via a Group), then each of those pipelines will only receive a subset of the messages from the stream. This is because, by default, the pipelines share the same Application ID.

Workaround: Use the Data Client Library to configure how your pipelines consume from a single stream. If your pipelines/applications use the Direct Kafka connector, you can specify a Kafka Consumer Group ID per pipeline/application. If the Kafka consumer group IDs are unique, the pipelines/applications will be able to consume all the messages from the stream.

If your pipelines use the HTTP connector, we recommend that you create a new Group for each pipeline/application, each with its own Application ID.

Issue: The Pipeline Status Dashboard in Grafana can currently be edited by users. Any changes made by users will be lost when updates are published in future releases, and a future release will remove the ability to edit the dashboard.

Workaround: Duplicate the dashboard or create a new dashboard.

Issue: For Stream pipeline versions running in high-availability mode, the selection of the primary Job Manager fails in rare cases.

Workaround: Restart the stream pipeline.

Deprecated: The Batch-2.0.0 run-time environment for Batch pipelines is now deprecated. We recommend that you migrate your Batch Pipelines to the Batch-2.1.0 run-time environment. See the Migration section in the Pipelines Developer Guide.

Location Services

Issue: Usage reporting is not available for the Location Services released in version 2.10 (Routing, Search, Transit, and Vector Tiles Service).

Workaround: Usage is being tracked at the service level. Following the 2.12 release wherein usage reporting is expected to be in place, customers may request usage summaries for usage incurred between the 2.10 release and 2.12.

Marketplace (Not available in China)

Issue: Users do not receive stream data usage metrics when reading or writing data from Kafka Direct.

Workaround: When writing data into a Stream layer, you must use the Ingest API to receive usage metrics. When reading data, you must use the Data Client Library, configured to use the HTTP connector type, to receive usage metrics and read data from a Stream layer.

Issue: When the Technical Accounting component is busy, the server can lose usage metrics.

Workaround: If you suspect you are losing usage metrics, contact HERE technical support for assistance rerunning queries and validating data.

Issue: Projects and all resources in a Project are designed for use only in Workspace and are unavailable for use in Marketplace. For example, a catalog created in a Platform Project can only be used in that Project. It cannot be marked as "Marketplace ready" and cannot be listed in the Marketplace.

Workaround: Do not create catalogs in a Project when they are intended for use in the Marketplace.

SDK for Python

Added: You can now use the SDK for Java and Scala with Jupyter notebooks on AWS EMR environments.

Added: The spark-ds-connector has been replaced with the latest SDK for Java and Scala Spark Connector. This supports both read and write capabilities using Scala within Jupyter notebooks. Sample notebooks have been updated with this latest Spark Connector.

Deprecated: The spark-ds-connector has been deprecated. Please upgrade to the latest SDK for Python version which includes the latest SDK for Java and Scala Spark Connector and updated sample notebooks. Instructions for how to upgrade are in the Update to Latest SDK section of the SDK for Python Setup Guide.

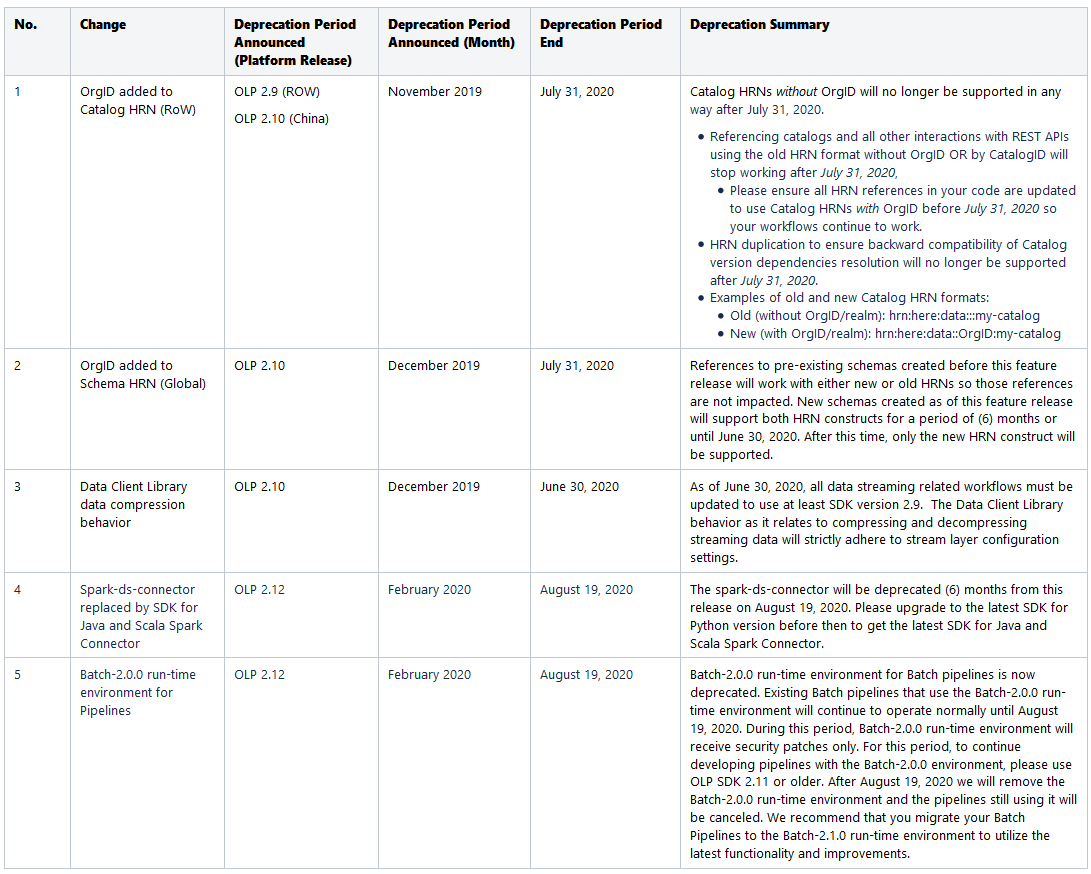

Summary of active deprecation notices across all components
